Pickle Rick

Enumeration

The first step in my enumeration is always scanning the box with Nmap. The command that I used is: nmap -sC -sV -oN nmap-scan <ip> -p- I always use the -oN flag to save the Nmap result for later review. The result Nmap gave me was:

┌──(kali㉿kali)-[192.168.213.128]-[13:48:38 12/09/2024]-[~/thm/pickle-rick]

└─$ nmap -sC -sV -oN nmap-scan [ip] -p-

# Nmap 7.94SVN scan initiated Thu Sep 12 13:06:31 2024 as: nmap -sC -sV -oN nmap-scan 10.10.96.74

Nmap scan report for 10.10.96.74

Host is up (0.023s latency).

Not shown: 998 closed tcp ports (conn-refused)

PORT STATE SERVICE VERSION

22/tcp open ssh OpenSSH 8.2p1 Ubuntu 4ubuntu0.11 (Ubuntu Linux; protocol 2.0)

| ssh-hostkey:

| 3072 b7:33:d5:d9:db:e0:81:a9:f8:5d:a4:4f:36:16:a3:4a (RSA)

| 256 6a:e2:88:84:d1:1a:b0:8b:bd:e3:de:20:99:e9:bb:f8 (ECDSA)

|_ 256 15:ba:da:57:13:f0:27:8b:0a:ab:c5:f2:1e:16:41:21 (ED25519)

80/tcp open http Apache httpd 2.4.41 ((Ubuntu))

|_http-title: Rick is sup4r cool

|_http-server-header: Apache/2.4.41 (Ubuntu)

Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel

Service detection performed. Please report any incorrect results at https://nmap.org/submit/ .

# Nmap done at Thu Sep 12 13:06:39 2024 -- 1 IP address (1 host up) scanned in 7.96 seconds
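Since the result is saved with -oN, the open ports can be pulled back out of the file later without rescanning. A minimal sketch, assuming the scan was saved as nmap-scan as in the command above; the helper name open_ports is made up for illustration and is not part of Nmap:

```shell
#!/usr/bin/env bash
# open_ports FILE: print "port/proto service" for every open port found in a
# normal-format (-oN) Nmap output file. Hypothetical helper, not an Nmap feature.
open_ports() {
    # Port lines look like: "22/tcp open ssh OpenSSH 8.2p1 ..."
    # Script output lines start with "|" and are ignored by the first test.
    awk '$1 ~ /^[0-9]+\/(tcp|udp)$/ && $2 == "open" { print $1, $3 }' "$1"
}

# Usage (assumes the scan was saved as nmap-scan):
#   open_ports nmap-scan
```

Run against the output above, this should list only the SSH and HTTP lines.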

So now we have two services: SSH and HTTP. At this point, I know that I can't use SSH because I do not have any credentials or SSH keys, so it's better not to even try it. Let's go with the HTTP service. The simplest way to start is to paste the IP address into a browser and look at the website.

It is a simple page with a description and an image. At first glance, nothing seems interesting. From here we can do a few things to enumerate the website:

- check source code

- check cookies

- check any JavaScript files

- monitor the communication with BurpSuite

- directory brute forcing

- a 6th idea came to my mind only later; I will discuss it further down in the writeup

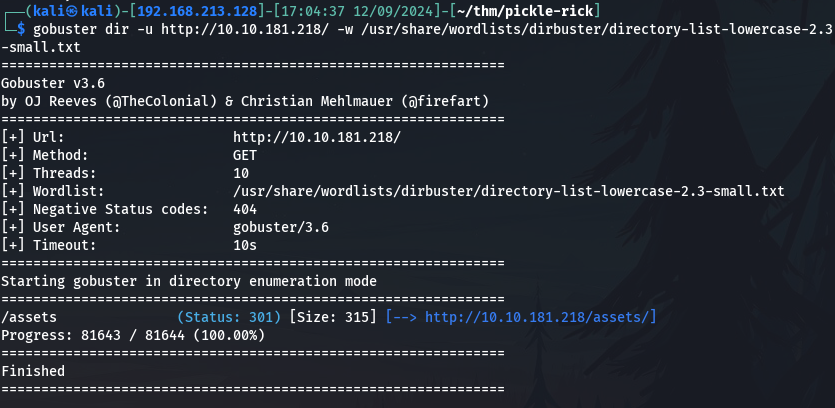

To be more efficient, I first started directory brute forcing with gobuster:

gobuster dir -u http://10.10.181.218/ -w /usr/share/wordlists/dirbuster/directory-list-lowercase-2.3-small.txt

The only result was the discovery of the /asset directory path, which was very disappointing.

I will get back to this result later.

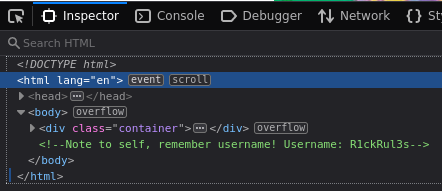

While gobuster was running, I assessed the website manually (points 1, 2 and 3 of the list above). Checking the source code, I found a comment exposing the username.

Easy peasy, I got the username!

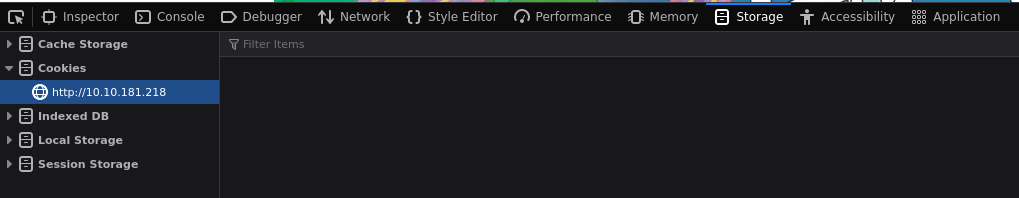
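The same check can be done from the terminal instead of the browser's view-source. A minimal sketch, assuming the page is fetched with curl; the helper name html_comments is hypothetical, and it prints whole lines, so anything sharing a line with a comment is printed too:

```shell
#!/usr/bin/env bash
# html_comments: read HTML on stdin and print every line that belongs to an
# HTML comment, including comments that span several lines.
# Hypothetical helper; a line-based filter, not a real HTML parser.
html_comments() {
    awk '
        /<!--/ { inside = 1 }   # a comment opens on this line
        inside { print }        # print while inside a comment
        /-->/  { inside = 0 }   # the comment closes on this line
    '
}

# Usage (replace <ip> with the target address):
#   curl -s http://<ip>/ | html_comments
```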

The first step is done! The second step is checking the cookies. But the site has no cookies.

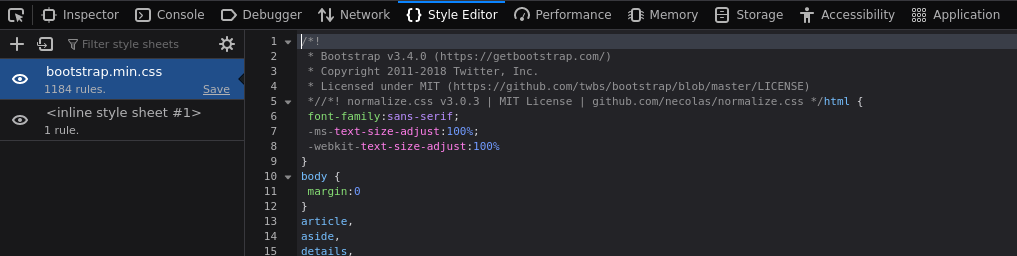

The third step is to check any JavaScript files. But there were only the libraries used to build the website, the standard Bootstrap JS files, nothing interesting.

At this point I started to lose hope. How can an easy box put me in such a difficult position?

In the meantime, BurpSuite logged a few interactions with the website, but I did not find anything interesting. I was hoping to find some GET or POST parameters, such as a user or admin parameter, to elevate my privileges, but nothing like that was implemented.

I even tried to access some paths manually, as you can see from the screenshot, such as:

- /management

- /panel

- /apache

- /console

None of them worked. At this moment I remembered robots.txt, the 6th point that was missing from the list above. The robots.txt file disclosed an alphabetic string: Wubbalubbadubdub. Since I had found the username earlier, this could be the password, but I did not take it for granted, as it could be a rabbit hole.
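Guessing paths by hand gets tedious, so the same checks, robots.txt included, can be scripted. A minimal sketch, assuming curl is installed; probe_paths is a hypothetical helper name, and the path list is just the guesses from this writeup:

```shell
#!/usr/bin/env bash
# probe_paths BASE_URL PATH...: print the HTTP status code for each path.
# Hypothetical helper built on curl: -s silences progress output,
# -o /dev/null discards the body, -w '%{http_code}' prints only the status.
probe_paths() {
    local base="${1%/}"   # drop one trailing slash so the URLs join cleanly
    shift
    local p code
    for p in "$@"; do
        code=$(curl -s -o /dev/null -w '%{http_code}' "$base/${p#/}")
        printf '%s %s\n' "$code" "/${p#/}"
    done
}

# Usage (replace <ip> with the target address):
#   probe_paths http://<ip> robots.txt management panel apache console
```

A 200 next to a path means it is worth opening in the browser; a 404 means the guess was wrong.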

With these 2 findings, the username and a possible password, I spent almost 1 hour trying to log in to the SSH service. It gave me several error messages, which I tried to fix, and eventually I gave up on SSH and went back to the website. The SSH service was a rabbit hole that cost me 1 hour, but it improved my ability to detect rabbit holes. It was not totally useless: I learnt a few things about SSH config files as well, and who knows, they may come in handy in future challenges.

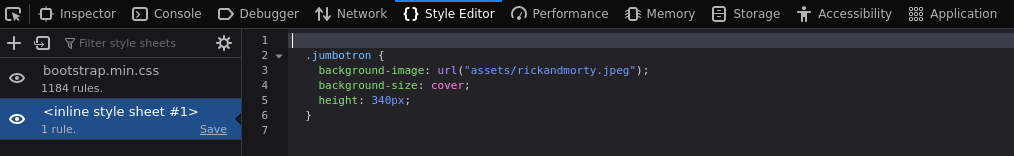

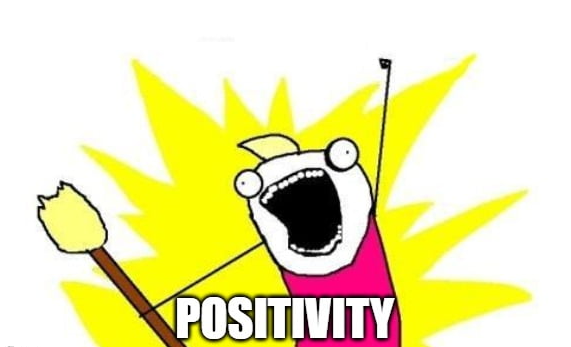

At this point, I checked the gobuster result again, and it showed /asset. By accessing it I could see some static files such as images, JavaScript and CSS.

I admit at this point I was tempted to take a peek at the solution, but I resisted! So far I had checked all 6 points listed. Given the number of images, I thought there might be a steganography challenge, so I downloaded a few images and checked whether they contained any readable text with the strings command (strings <filename>), for example strings portal.jpg. This attempt failed too.

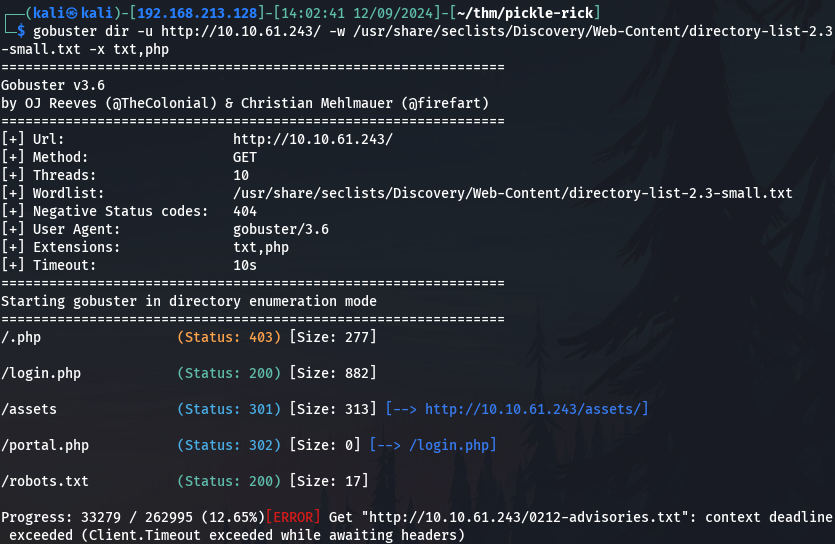

I searched on Google for web enumeration, read a few pages about people doing web enumeration in other challenges, and noticed that they were using the SecLists wordlists. I immediately installed them by typing sudo apt install seclists. I went to run Gobuster again, and while I was reading the available options, one in particular caught my eye: the -x that I used to use. This option looks for files ending with a certain extension:

-x, --extensions string    File extension(s) to search for

I returned to Gobuster with pretty much identical flags and the same wordlist, this time from a different path, and I added -x txt,php. Now why did I add .php? It was just an educated guess, as most of the boxes that I have played ran PHP. I was also tempted to add more extensions such as .pdf, .js, and .css, but they are not useful to me and they would certainly make the scan slower. So the command I ran was:

gobuster dir -u http://10.10.61.243/ -w /usr/share/seclists/Discovery/Web-Content/directory-list-2.3-small.txt -x txt,php

This time the result was very different. I did not let Gobuster go through the whole wordlist because it started to give me errors, so I stopped it.
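To see why every extra extension slows the scan down, it helps to picture what -x txt,php asks for: each word is requested on its own and once more per extension. The sketch below only mimics that idea on a tiny wordlist; expand_candidates is a hypothetical helper and is not Gobuster's code:

```shell
#!/usr/bin/env bash
# expand_candidates EXTS < wordlist: print the paths an extension-aware scan
# would request: the bare word, then word.ext for every extension in EXTS.
# EXTS is a comma-separated list such as "txt,php". Illustration only.
expand_candidates() {
    local exts word ext
    exts=$(printf '%s' "$1" | tr ',' ' ')   # "txt,php" -> "txt php"
    while IFS= read -r word; do
        [ -z "$word" ] && continue           # skip blank lines
        printf '/%s\n' "$word"
        for ext in $exts; do                 # unquoted on purpose: split on spaces
            printf '/%s.%s\n' "$word" "$ext"
        done
    done
}

# Usage: two words and two extensions give six candidate paths.
#   printf 'login\nrobots\n' | expand_candidates txt,php
```

With two extensions the number of requests triples, which is why I left out .pdf, .js, and .css.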

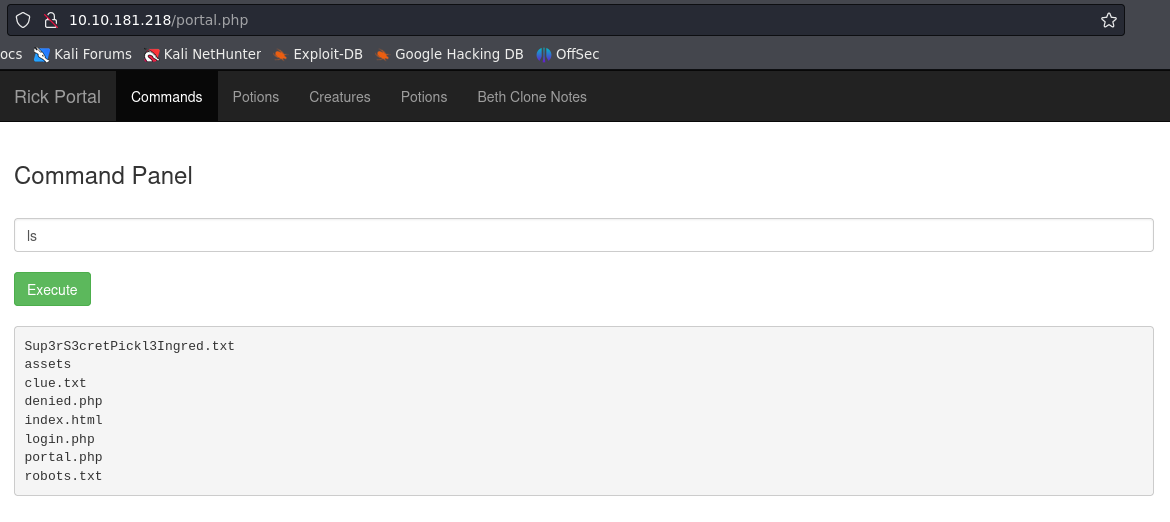

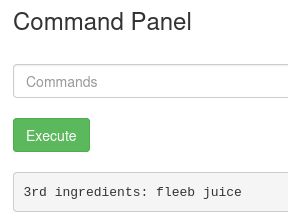

This new finding immediately lifted my morale! I tried portal.php and login.php; both ended up at login.php. Entering the username and the string Wubbalubbadubdub logged me into a page where I can run bash commands.

Restricted SHELL

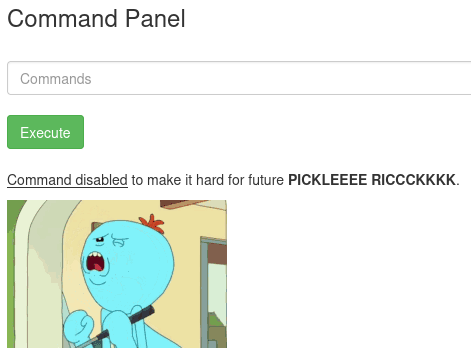

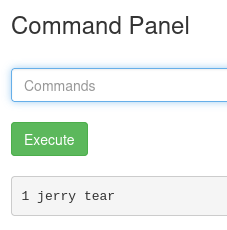

Now it is time to read the files and search for the ingredients. The first thing is to run ls to list the directory and see what files it contains. Immediately I can see a file named Sup3rS3cretPickl3Ingred.txt. Trying to cat it, I receive the following error message.

Damn! I can't use the cat command! Wait a minute... I know more commands to achieve similar results thanks to my experiences with Linux and they are:

- more

- less

- strings

- vim

- nano

- vi

Note that I listed 3 text editors as well; my idea was to open the file with one of them to see the content. Among all the commands listed, less worked for the first ingredient. Later on I found out that strings works for all the ingredients, so I will use strings.
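When external commands like cat are blocked, another fallback worth knowing is reading the file with shell builtins only. A minimal sketch; print_file is a hypothetical helper, and I did not test whether this box's command panel would accept it:

```shell
#!/usr/bin/env bash
# print_file FILE: print a text file using only shell builtins (read, printf),
# for situations where cat is unavailable or filtered.
print_file() {
    local line
    # The second test keeps a final line that has no trailing newline.
    while IFS= read -r line || [ -n "$line" ]; do
        printf '%s\n' "$line"
    done < "$1"
}

# Usage:
#   print_file Sup3rS3cretPickl3Ingred.txt
```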

The first ingredient is done; now it is time to look for the other two. After exploring the system I found out that the second ingredient is in Rick's home directory.

Let's try to read it with strings. Running strings /home/rick/second ingredients gives me empty results.

How come? I know this one! I had to face a similar challenge in a bash CTF that I did when I started my journey in the cybersecurity field: the Bandit wargame on OverTheWire. The issue is the space in the file name. Wrapping the path in quotes solves it: strings "/home/rick/second ingredients"
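The effect of the space is easy to reproduce locally. In this small sketch, count_args is a made-up helper that only reports how many arguments the shell handed to it:

```shell
#!/usr/bin/env bash
# count_args: print how many arguments it received.
count_args() {
    printf '%s\n' "$#"
}

# Unquoted, the shell splits the path at the space, so a command receives
# "/home/rick/second" and "ingredients" as two separate file names.
count_args /home/rick/second ingredients      # prints 2

# Quoted, the whole path reaches the command as a single argument.
count_args "/home/rick/second ingredients"    # prints 1

# Escaping the space with a backslash has the same effect as quoting.
count_args /home/rick/second\ ingredients     # prints 1
```

Without the quotes, strings was most likely looking for two files that do not exist, which would explain the empty output.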

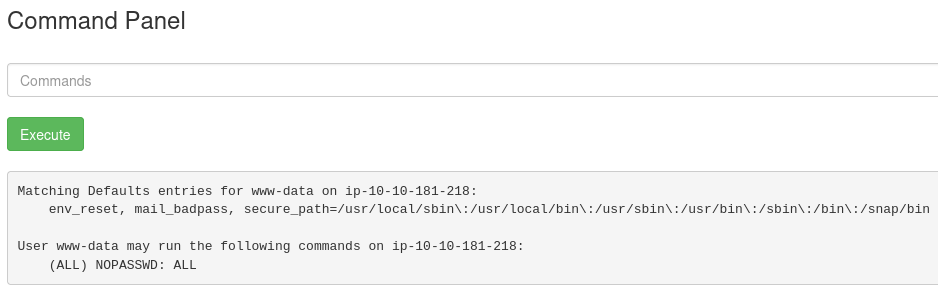

Now it's time to find the 3rd ingredient. After spending some time I tried to access the root folder but I could not. So, as I usually do during privilege escalation on other boxes, I ran sudo -l and, surprisingly, got the following output.

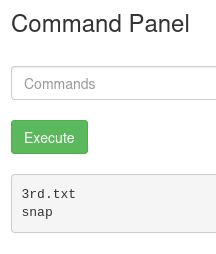

I can run any command with sudo! So the command that I have to run is sudo ls /root, and I can see the name of the 3rd ingredient.

Now let's try to read it: sudo strings /root/3rd.txt

And finally here is the 3rd ingredient.

Takeaway

At a certain point during the challenge, I thought I had to obtain a reverse shell and spent almost 2 hours trying to achieve it via the browser and BurpSuite. I tried various techniques, strategies and encodings, but they did not work. I also tried to upload a reverse shell, which did not work either.

During all these failed attempts I learned other things, even if they were not related to the completion of the challenge. The time spent on the failed attempts was certainly not wasted. I took my time to assess the website thoroughly, and I did my research without rushing it. When needed I took a peek at the solution as long as it helped me to learn. The key is patience. Also, I was able to finish the challenge very quickly once I took my lunch break; it seems my mind had cleared.